Introduction

Chatbots have transformed the way we engage with technology, enabling automated, intelligent conversations across various domains. Building these chat systems can be challenging, especially when aiming for flexibility and scalability. AutoGen simplifies this process by using AI agents that handle complex dialogues and tasks autonomously. In this article, we’ll build agentic chatbots with AutoGen and see how its agent-based framework makes it easier to create adaptive, intelligent conversational bots.

Overview

- Learn what the AutoGen framework is all about and what it can do.

- See how you can create chatbots that can hold discussions with each other, respond to human queries, search the web, and do even more.

- Know the setup requirements and prerequisites needed for building agentic chatbots using AutoGen.

- Learn how to enhance chatbots by integrating tools like Tavily for web searches.

What is AutoGen?

In AutoGen, all interactions are modelled as conversations between agents. This agent-to-agent, chat-based communication streamlines the workflow, making it intuitive to start building chatbots. The framework also offers flexibility by supporting various conversation patterns such as sequential chats, group chats, and more.

Let’s explore the AutoGen chatbot capabilities as we build different types of chatbots:

- Dialectic between agents: Two experts in a field discuss a topic and try to resolve their contradictions.

- Interview preparation chatbot: An agent plays the interviewer, asking questions and evaluating our answers so we can prepare for an interview.

- Chat with Web search tool: We chat with an agent that can call a search tool to fetch information from the web.


Prerequisites

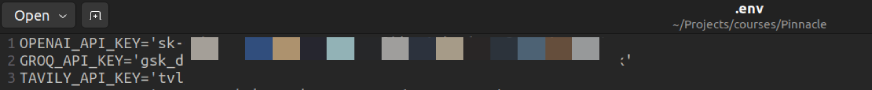

Before building AutoGen agents, ensure you have the necessary API keys for LLMs. We will also use Tavily to search the web.

Accessing via API

In this article, we use OpenAI and Groq API keys. Groq offers free access to many open-source LLMs, subject to rate limits.

We can use any LLM we prefer. Start by generating an API key for the chosen LLM provider and another for the Tavily search tool.

Create a .env file to store these keys securely, keeping them private while making them easily accessible within your project.
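To make the .env step concrete, here is a minimal sketch of reading such a file with only the standard library. The helper name `load_env_file` is hypothetical, and the variable names OPENAI_API_KEY, GROQ_API_KEY, and TAVILY_API_KEY are assumptions based on what the respective client libraries usually read from the environment. In practice, many projects use the python-dotenv package for this instead.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env") -> dict[str, str]:
    """Read KEY=VALUE pairs from a .env file and copy them into os.environ.

    Hypothetical helper for illustration; values already present in the
    real environment are left untouched.
    """
    loaded: dict[str, str] = {}
    env_path = Path(path)
    if not env_path.exists():
        return loaded
    for raw_line in env_path.read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        # Skip blank lines, comments, and anything without a KEY=VALUE shape.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key = key.strip()
        value = value.strip().strip("'\"")
        # Variables already set in the environment take precedence.
        os.environ.setdefault(key, value)
        loaded[key] = value
    return loaded
```

A .env file for this article would then hold one line per key, for example `OPENAI_API_KEY=...`, and calling `load_env_file()` once at the top of the script makes the keys visible to the libraries that look them up in the environment.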

Libraries Required

autogen-agentchat – 0.2.36

tavily-python – 0.5.0

groq – 0.7.0

openai – 1.46.0

Dialectic Between Agents

Dialectic is a method of argumentation or reasoning that seeks to explore and resolve contradictions or opposing viewpoints. Here, we let two LLMs take part in a dialectic using AutoGen agents.

Let’s create our first agent:

from autogen import ConversableAgent

agent_1 = ConversableAgent(
    name="expert_1",
    system_message="""You are participating in a Dialectic about concerns of Generative AI with another expert.
Make your points on the thesis concisely.""",
    llm_config={"config_list": [{"model": "gpt-4o-mini", "temperature": 0.5}]},
    code_execution_config=False,
    human_input_mode="NEVER",
)

Code Explanation

- ConversableAgent: This is the base class for building customizable agents that can talk and interact with other agents, people, and tools to solve tasks.

- System Message: The system_message parameter defines the agent’s role and purpose in the conversation. In this case, agent_1 is instructed to engage in a dialectic about generative AI, making concise points on the thesis.

- llm_config: This configuration specifies the language model to be used, here “gpt-4o-mini”. Additional parameters like temperature=0.5 are set to control the model’s response creativity and variability.

- code_execution_config=False: This indicates that no code execution capabilities are enabled for the agent.

- human_input_mode="NEVER": This setting ensures the agent doesn’t rely on human input, operating entirely autonomously.
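Since the same llm_config shape recurs for every agent in this article, it can help to build it in one place. The sketch below is only an illustration: `make_llm_config` and its `key_env_var` parameter are hypothetical names, and passing `api_key` inside the config entry is one possible way to supply the key, alongside relying on the environment variable directly.

```python
import os
from typing import Optional


def make_llm_config(model: str, temperature: float,
                    api_type: Optional[str] = None,
                    key_env_var: str = "OPENAI_API_KEY") -> dict:
    """Build an llm_config dict shaped like the ones used in this article."""
    entry = {"model": model, "temperature": temperature}
    if api_type is not None:
        # For example "groq" when the model is served by Groq.
        entry["api_type"] = api_type
    api_key = os.environ.get(key_env_var)
    if api_key:
        # Pass the key explicitly only when it is available.
        entry["api_key"] = api_key
    return {"config_list": [entry]}
```

With this helper, the first agent’s configuration would be `make_llm_config("gpt-4o-mini", 0.5)`, and a Groq-hosted model would additionally pass `api_type="groq"` and `key_env_var="GROQ_API_KEY"`.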

Now, let’s create the second agent:

agent_2 = ConversableAgent(
    "expert_2",
    system_message="""You are participating in a Dialectic about concerns of Generative AI with another expert. Make your points on the anti-thesis concisely.""",
    llm_config={"config_list": [{"api_type": "groq", "model": "llama-3.1-70b-versatile", "temperature": 0.3}]},
    code_execution_config=False,
    human_input_mode="NEVER",
)

Here, we use the Llama 3.1 model from Groq. To learn how to configure other LLMs, refer to the AutoGen documentation.

Let us initiate the chat:

result = agent_1.initiate_chat(
    agent_2,
    message="""The nature of data collection for training AI models poses inherent privacy risks""",
    max_turns=3,
    silent=False,
    summary_method="reflection_with_llm",
)

Code Explanation

In this code, agent_1 initiates a conversation with agent_2 using the provided message.

- max_turns=3: This limits the conversation to three exchanges between the agents before it automatically ends.

- silent=False: This will display the conversation in real-time.

- summary_method="reflection_with_llm": This employs a large language model (LLM) to summarize the entire dialogue between the agents after the conversation concludes, providing a reflective summary of their interaction.

You can go through the entire dialectic using the chat_history attribute of the returned result.

Here’s the result:

# with max_turns=3, each agent contributes 3 messages
len(result.chat_history)
# 6

# we can also check the cost incurred
print(result.cost)

# get the chat history
print(result.chat_history)

# finally, the summary of the chat
print(result.summary['content'])
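The count of six messages follows from how turns add up: each turn consists of one message from the initiating agent and one reply from the other. The toy model below reproduces that arithmetic without calling any LLM. It is a simplified sketch, not AutoGen’s implementation, and `simulate_chat` and the canned replies are hypothetical.

```python
def simulate_chat(first_message: str, max_turns: int) -> list[dict]:
    """Toy stand-in for a two-agent chat; no LLM calls are made."""
    # The initiator's opening message is the first entry in the history.
    history = [{"name": "expert_1", "content": first_message}]
    speakers = ["expert_2", "expert_1"]
    # Each turn is one message from the initiator plus one reply from the
    # recipient, so max_turns turns yield 2 * max_turns messages in total.
    while len(history) < 2 * max_turns:
        speaker = speakers[(len(history) - 1) % 2]
        history.append({"name": speaker,
                        "content": f"message {len(history) + 1} from {speaker}"})
    return history


history = simulate_chat("Data collection poses privacy risks", max_turns=3)
print(len(history))
```

Under this model, the opening message counts toward the first agent’s three messages, which is why the history contains six entries rather than seven.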

Interview Preparation Chatbot

In addition to making two agents chat amongst themselves, we can also chat with an AI agent. Let’s try this by building an agent that can be used for interview preparation.

interviewer = ConversableAgent(
    "interviewer",
    system_message="""You are interviewing to select for the Generative AI intern position.
Ask suitable questions and evaluate the candidate.""",
    llm_config={"config_list": [{"api_type": "groq", "model": "llama-3.1-70b-versatile", "temperature": 0.0}]},
    code_execution_config=False,
    human_input_mode="NEVER",
    # max_consecutive_auto_reply=2,
    is_termination_msg=lambda msg: "goodbye" in msg["content"].lower()
)

Code Explanation

Use the system_message to define the role of the agent.

To terminate the conversation, we can use either of the following two parameters:

- max_consecutive_auto_reply: This parameter limits the number of consecutive replies an agent can send. Once the agent reaches this limit, the conversation automatically ends, preventing it from continuing indefinitely.

- is_termination_msg: This parameter checks if a message contains a specific pre-defined keyword. When this keyword is detected, the conversation is automatically terminated.
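A keyword check like the one passed to is_termination_msg can fail if a message has no text content, for instance when `content` is None. As a hedged sketch, the factory below builds a predicate that guards against that case. The name `make_termination_check` is hypothetical, and the assumption is only that each message is a dict with an optional "content" key, as in the lambdas used in this article.

```python
from typing import Callable


def make_termination_check(keyword: str) -> Callable[[dict], bool]:
    """Return a predicate that can be passed as is_termination_msg."""
    needle = keyword.lower()

    def is_termination_msg(msg: dict) -> bool:
        content = msg.get("content")
        # Messages without plain-text content never trigger termination.
        if not isinstance(content, str):
            return False
        # Case-insensitive keyword match.
        return needle in content.lower()

    return is_termination_msg


ends_interview = make_termination_check("goodbye")
```

The resulting `ends_interview` function could be passed in place of the lambda, so that a message without text is simply treated as non-terminating instead of raising an error.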

candidate = ConversableAgent(
    "candidate",
    system_message="""You are attending an interview for the Generative AI intern position.
Answer the questions accordingly""",
    llm_config=False,
    code_execution_config=False,
    human_input_mode="ALWAYS",
)

Since the user is going to provide the answers, we use human_input_mode="ALWAYS" and llm_config=False.

Now, we can start the mock interview:

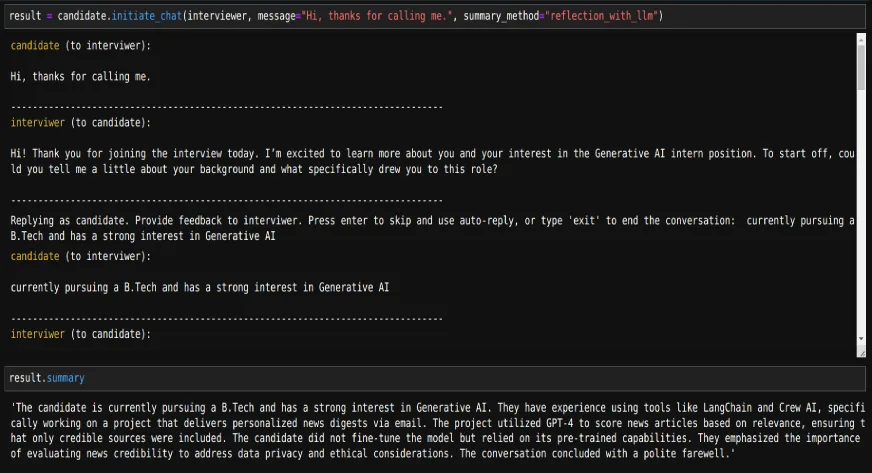

result = candidate.initiate_chat(interviewer, message="Hi, thanks for calling me.", summary_method="reflection_with_llm")

# we can get the summary of the conversation too

print(result.summary)

Chat with Web Search

Now, let’s build a chatbot that can search the internet to answer the queries we ask.

For this, first, define a function that searches the web using Tavily.

from tavily import TavilyClient
from autogen import register_function

def web_search(query: str):
    tavily_client = TavilyClient()
    response = tavily_client.search(query, max_results=3)
    return response['results']

Next, create an assistant agent that decides whether to call the tool or terminate:

assistant = ConversableAgent(
    name="Assistant",
    system_message="""You are a helpful AI assistant. You can search web to get the results.
Return 'TERMINATE' when the task is done.""",
    llm_config={"config_list": [{"model": "gpt-4o-mini"}]},
    silent=True,
)

The user proxy agent interacts with the assistant agent and executes the tool calls.

user_proxy = ConversableAgent(
    name="User",
    llm_config=False,
    is_termination_msg=lambda msg: msg.get("content") is not None and "TERMINATE" in msg["content"],
    human_input_mode="TERMINATE",
)

When the termination condition is met, it will ask for human input. We can either continue to query or end the chat.

Register the function for the two agents:

register_function(
    web_search,
    caller=assistant,  # The assistant agent can suggest calls to the web search tool.
    executor=user_proxy,  # The user proxy agent can execute the web search calls.
    name="web_search",  # By default, the function name is used as the tool name.
    description="Searches the internet to get the results for a given query",  # A description of the tool.
)

Now we can query:

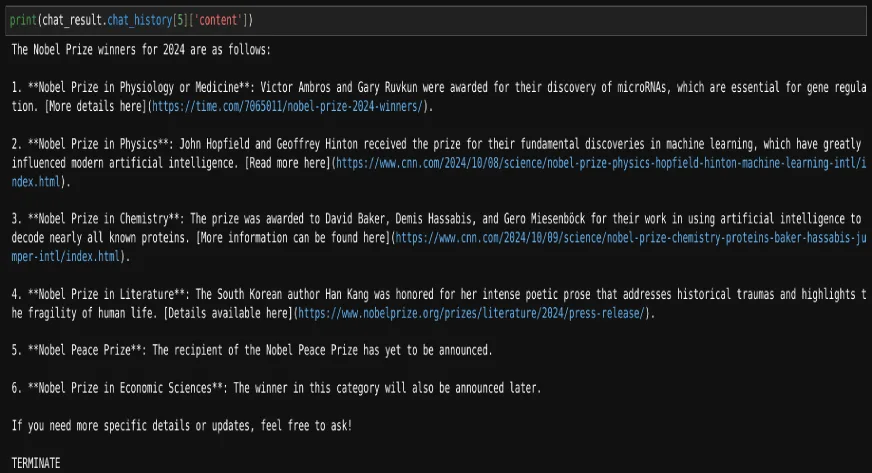

chat_result = user_proxy.initiate_chat(assistant, message="Who won the Nobel prizes in 2024")

# Depending on the length of the chat history we can access the necessary content

print(chat_result.chat_history[5]['content'])
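Because the index of the final answer depends on how many tool calls happened, a fixed position such as 5 may not always point at it. As an alternative, the sketch below walks the history backwards and returns the last plain-text message. The helper `last_text_reply` is hypothetical, and the message keys it inspects ("role", "tool_calls", "tool_responses", "content") are assumptions about the history format, so check them against the actual output of `chat_result.chat_history` before relying on this.

```python
from typing import Optional


def last_text_reply(chat_history: list[dict],
                    stop_word: str = "TERMINATE") -> Optional[str]:
    """Return the most recent plain-text message, ignoring tool traffic."""
    for msg in reversed(chat_history):
        # Skip tool calls and tool results (key names are assumed).
        if (msg.get("role") == "tool"
                or msg.get("tool_calls")
                or msg.get("tool_responses")):
            continue
        content = msg.get("content")
        if not isinstance(content, str):
            continue
        # Drop the termination marker; skip messages that contain nothing else.
        text = content.replace(stop_word, "").strip()
        if text:
            return text
    return None
```

With the chat above, this would be called as `print(last_text_reply(chat_result.chat_history))`.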

In this way, we can build different types of agentic chatbots using AutoGen.


Conclusion

In this article, we learned how to build agentic chatbots using AutoGen and explored their various capabilities. With its agent-based architecture, developers can build flexible and scalable bots capable of complex interactions, such as dialectics and web searches. AutoGen’s straightforward setup and tool integration empower users to craft customized conversational agents for various applications. As AI-driven communication evolves, AutoGen serves as a valuable framework for simplifying and enhancing chatbot development, enabling engaging user interactions.

To master AI agents, check out our Agentic AI Pioneer Program.

Frequently Asked Questions

Q1. What is AutoGen?

A. AutoGen is a framework that simplifies the development of chatbots by using an agent-based architecture, allowing for flexible and scalable conversational interactions.

Q2. Does AutoGen support different conversation patterns?

A. Yes, AutoGen supports various conversation patterns, including sequential and group chats, allowing developers to tailor interactions based on their needs.

Q3. How does AutoGen handle conversations between multiple agents?

A. AutoGen uses agent-to-agent communication, enabling multiple agents to engage in structured dialogues, such as dialectics, making it easier to manage complex conversational scenarios.

Q4. How can we terminate a chat in AutoGen?

A. You can terminate a chat in AutoGen by using parameters like `max_consecutive_auto_reply`, which limits the number of consecutive replies, or `is_termination_msg`, which checks for specific keywords in the conversation to trigger an automatic end. You can also use `max_turns` to limit the length of the conversation.

Q5. Can AutoGen agents use external tools?

A. Yes. AutoGen allows agents to use external tools, like Tavily for web searches, by registering functions that the agents can call during conversations, enhancing the chatbot’s capabilities with real-time data and additional functionality.